I ran into the same issue detailed here while working with an RKE cluster:

https://github.com/metallb/metallb/issues/1154

After a few hours of looking around and digging into the logs, I figured out the issue. Hopefully this saves some time for someone else out there in the same situation.

Make sure IPVS mode is enabled in the cluster configuration

If you are using:

RKE2 – Edit the cluster.yaml file

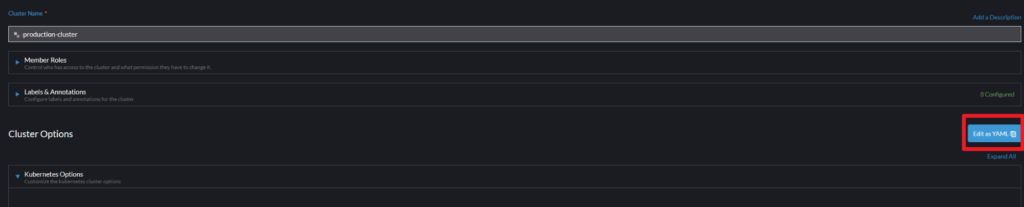

RKE1 – Edit the cluster configuration from the Rancher UI > Cluster Management > select the cluster > Edit Config > Edit as YAML

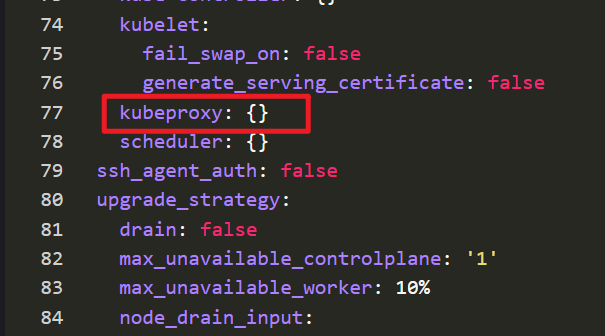

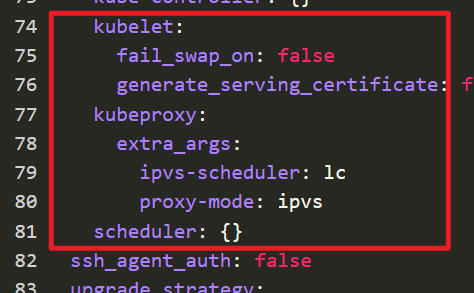

Locate the services field under rancher_kubernetes_engine_config and add the following options to enable IPVS:

    kubeproxy:
      extra_args:
        ipvs-scheduler: lc
        proxy-mode: ipvs

https://www.suse.com/support/kb/doc/?id=000020035

Default

After changes

Make sure the kernel modules are enabled on the nodes running the control plane

Background

Example Rancher – RKE1 cluster

sudo docker ps | grep proxy # find the container ID for kube-proxy

sudo docker logs <container-id>

I0313 21:44:08.315888 108645 feature_gate.go:245] feature gates: &{map[]}

I0313 21:44:08.346872 108645 proxier.go:652] "Failed to load kernel module with modprobe, you can ignore this message when kube-proxy is running inside container without mounting /lib/modules" moduleName="nf_conntrack_ipv4"

E0313 21:44:08.347024 108645 server_others.go:107] "Can't use the IPVS proxier" err="IPVS proxier will not be used because the following required kernel modules are not loaded: [ip_vs_lc]"

kube-proxy tries to load the kernel modules it needs, fails, and therefore cannot enable IPVS.

Let's enable the kernel modules:
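If the kube-proxy log is long, the missing modules can be pulled out with a small filter. This is only a sketch: `missing_ipvs_modules` is a helper name I made up, and it assumes the error line has the same wording and bracketed module list as the log shown above.

```shell
# Print each kernel module that kube-proxy reported as not loaded,
# one per line. Reads kube-proxy log text on stdin.
# Assumption: the error line looks like the one in the log above,
# i.e. it ends with "... are not loaded: [mod_a mod_b]".
missing_ipvs_modules() {
  grep 'required kernel modules are not loaded' |
    sed -e 's/.*not loaded: \[\([^]]*\)].*/\1/' |
    tr ' ' '\n' |
    sort -u
}

# Usage (the container ID is a placeholder):
#   sudo docker logs <container-id> 2>&1 | missing_ipvs_modules
```

For the log above this should print just ip_vs_lc, which is the module matching the lc scheduler chosen in the cluster configuration.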

sudo nano /etc/modules-load.d/ipvs.conf

ip_vs_lc

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack_ipv4
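Files under /etc/modules-load.d are read at boot, so nothing is loaded yet at this point. To see which entries are still missing, before or after the reboot, the file can be compared against /proc/modules. A rough sketch: `unloaded_modules` is a hypothetical helper name, and modules compiled directly into the kernel do not appear in /proc/modules, so they would be listed as missing even though they are available. As far as I know, nf_conntrack_ipv4 was folded into nf_conntrack in kernel 4.19 and later, so that entry may show up as missing on newer distributions.

```shell
# List modules named in a modules-load.d style file that are absent
# from a /proc/modules style listing. Both paths are parameters so the
# function can be pointed at other files; the second defaults to
# /proc/modules.
unloaded_modules() {
  conf_file=$1
  proc_modules=${2:-/proc/modules}
  # Skip blank lines and comment lines (starting with # or ;).
  grep -v -e '^[[:space:]]*$' -e '^[[:space:]]*[#;]' "$conf_file" |
    while read -r mod; do
      # awk exits 0 only if some line's first field equals the module name.
      if ! awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$proc_modules"; then
        echo "$mod"
      fi
    done
}

# Usage:
#   unloaded_modules /etc/modules-load.d/ipvs.conf
# To load whatever is missing right away instead of waiting for a reboot:
#   unloaded_modules /etc/modules-load.d/ipvs.conf |
#     while read -r m; do sudo modprobe "$m"; done
```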

Install ipvsadm to confirm the changes

sudo dnf install ipvsadm -y

Reboot the VM or the bare-metal server.

Run sudo ipvsadm to confirm that IPVS is enabled:

sudo ipvsadm

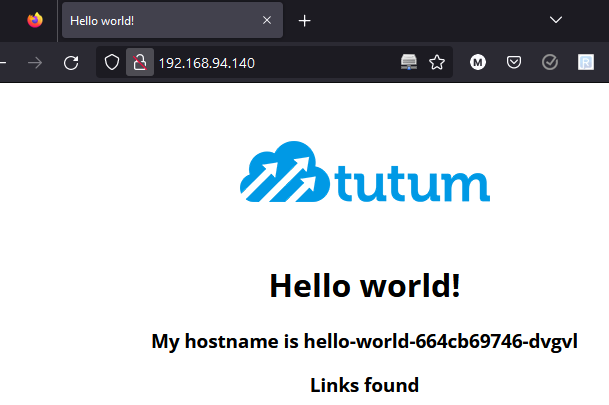
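To check this without reading the whole table, the numeric listing from `sudo ipvsadm -Ln` can be summarised per scheduler. This is a sketch that assumes the usual `ipvsadm -Ln` layout, with the protocol in the first column and the scheduler in the third; `ipvs_services_by_scheduler` is a hypothetical helper name.

```shell
# Count the virtual services in `ipvsadm -Ln` output (read from stdin),
# grouped by scheduler. Header lines and "->" real-server lines are
# skipped because their first field is not a protocol name.
ipvs_services_by_scheduler() {
  awk '$1 == "TCP" || $1 == "UDP" || $1 == "SCTP" { count[$3]++ }
       END { for (s in count) print s, count[s] }' |
    sort
}

# Usage:
#   sudo ipvsadm -Ln | ipvs_services_by_scheduler
```

With the configuration from earlier, the services created by kube-proxy should be listed under lc. No output at all would suggest kube-proxy is still not running in IPVS mode.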

Testing

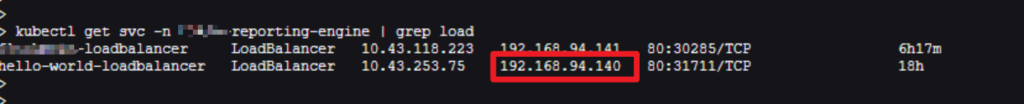

kubectl get svc -n <namespace> | grep -i load

arping -I ens192 192.168.94.140

ARPING 192.168.94.140 from 192.168.94.65 ens192

Unicast reply from 192.168.94.140 [00:50:56:96:E3:1D] 1.117ms

Unicast reply from 192.168.94.140 [00:50:56:96:E3:1D] 0.737ms

Unicast reply from 192.168.94.140 [00:50:56:96:E3:1D] 0.845ms

Unicast reply from 192.168.94.140 [00:50:56:96:E3:1D] 0.668ms

Sent 4 probes (1 broadcast(s))

Received 4 response(s)
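The same check can be scripted, for example to repeat it for several load balancer IPs. A minimal sketch, assuming the iputils arping summary format shown above; `arp_replied` is a name I made up for illustration.

```shell
# Succeed (exit 0) if arping output on stdin reports at least one
# response in its "Received N response(s)" summary line, fail otherwise.
arp_replied() {
  awk '/^Received [0-9]+ response/ { n = $2 } END { exit !(n > 0) }'
}

# Usage (interface and IP are the ones from the example above):
#   sudo arping -c 4 -I ens192 192.168.94.140 | arp_replied \
#     && echo "something is answering ARP for the service IP"
```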

If you have a Service of type LoadBalancer in front of a deployment, you should now be able to reach it, provided the container is responding on the service port.

Helpful links

https://metallb.universe.tf/configuration/troubleshooting/

https://github.com/metallb/metallb/issues/1154

https://github.com/rancher/rke2/issues/3710